ASML engineer Buket Şahin has become the first person to earn the ECP2 Silver certificate. For Şahin, that's just a side effect of her passion for learning. She likes to dig into new fields, and she has become a better system engineer because of it.

When Buket Şahin was doing her bachelor's degree in mechanical engineering in Istanbul, she joined her university's solar car and Formula SAE teams. That decision quickly made her realise the limits of her knowledge, and it put her on a lifelong track of learning about fields beyond her own.

“That’s when it all started”, Şahin recalls. “I saw how necessary it was to learn about other disciplines. Obviously, I knew the mechanical domain well, but suddenly I had to work with, for example, electrical engineers. I couldn’t understand what they were talking about, and I really wanted to.”

Şahin eventually graduated with a bachelor's degree in mechanical engineering and a master's in mechatronics, and also completed an MBA. She first worked as a systems engineer in the Turkish defence industry before moving to ASML in 2012. She started out in development & engineering on the NXT and NXE platforms. Today she works as a product safety system engineer for ASML's EUV machines.

Throughout that journey, she persistently sought out new knowledge, taking a score of courses in fields such as electronics, optics and mechatronics. At the end of 2024 she became the first person to achieve the ECP2 Silver certificate. ECP2, the European certified precision engineering course programme, emerged from a collaboration between euspen and DSPE. To receive the certificate, she had to complete 35 points' worth of ECP2-certified courses.

“My goal wasn’t to achieve this certification”, she laughs. “But in the end it turned out I was the first one to get it.”

Helicopter view

Şahin's position at ASML combines system engineering with a focus on safety. "We are responsible for the whole EUV machine from a safety point of view", she notes. "This includes internal and external alignment, overseeing the programme and managing engineers and architects."

Her team comprises up to several hundred people, including a core team of around fifteen system engineers. One of those positions is a safety-specific system engineer role, which is the one she fills.

''I need to maintain a helicopter view, but also be able to dig into the parts.''

She enjoys taking that wider systems perspective, which combines different fields. It allows her to put into practice the different things she has learned throughout her career. "I have broad interests", says Şahin. "I like all kinds of sub-fields of science and engineering. In systems engineering I can pursue that curiosity. That's also the reason why I like learning, and taking courses so much. As a system engineer you need to know a complex system, and the technical background of the parts. You need to be able to dig deeper into the design. You need to be able to dive into the different disciplines, but at the same time maintain a helicopter view. Maintaining that balance is something that I like very much."

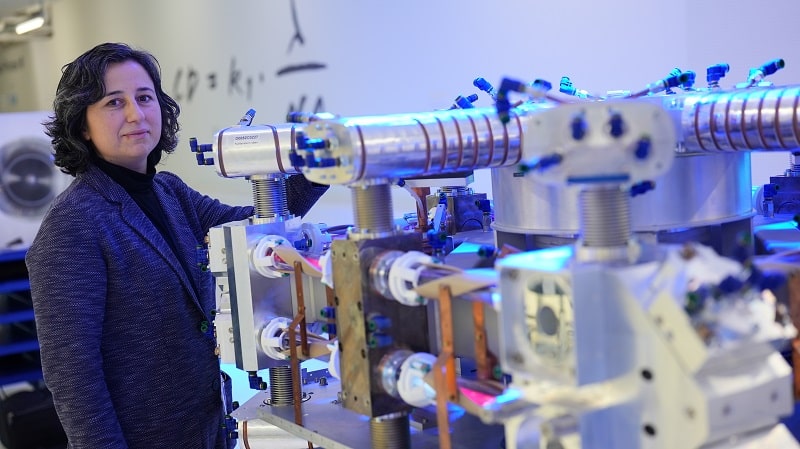

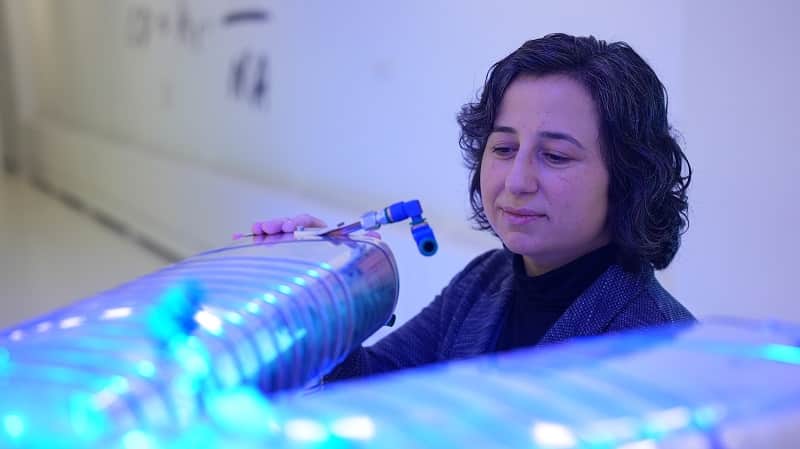

Buket Şahin at ASML’s experience center.

NASA handbook

Şahin started taking courses as soon as she joined ASML. She realised that she needed to expand her knowledge beyond what her degrees had taught her. "They were very theoretical", she admits. "They weren't very applied. The research and development industry in Turkey isn't as mature as it is in the Netherlands, particularly for semiconductors. In the Netherlands there's a very good interaction between universities and industry. I wanted to gain that hands-on knowledge. So I started with courses in mechatronics and electronics. Then I wanted to learn about optics, a very relevant field when you work at ASML. I just continued from there."

Curiosity is a driving force for Şahin. “Some courses I took because I needed the knowledge in my work, but others were out of curiosity. I wanted to develop myself and learn new things. The courses allowed me to do that.”

Interestingly, though, she didn't take any courses in system engineering. "I was mainly looking to gain a deeper knowledge in various technical disciplines", she says, looking back. "My first job was as a system engineer, but the way the role is defined varies heavily from company to company. System engineers in the semiconductor industry require knowledge of the different sub-fields of the industry. An ASML machine is also very complex, so you really need to update what you know. Things can change fast, and you need to stay up to date. That's why learning is such a big part of my career."

She did learn how to be a system engineer within ASML, both on the job and through internal courses. "There are internal ASML system engineering trainings", says Şahin. "That's why I didn't need external courses. Also, I learned the field from the NASA Systems Engineering Handbook back in Turkey. That's also the methodology that ASML uses."

Hands-on knowledge

When Şahin looks back on all the courses she has taken since moving to the Netherlands, it's the practical ones that stand out. "The most important thing I learned was applied knowledge", she says. "Going to university taught me the theory, but it's the day-to-day insights that are important. I particularly like it when courses teach you rules of thumb, pragmatic approaches and examples from the industry itself. That's the key knowledge for me. It particularly helps when the instructors are from the industry, so they can show us what they worked on themselves."

Since 2012, learning has also become easier. "When I started there weren't as many learning structures to guide you. High Tech Institute today, for example, has an easy-to-access course list. In 2012, however, I had to do much more research; courses weren't advertised as much, and they were even only available in Dutch. I had to ask colleagues and find out for myself. If I had to start today, things would be much easier."

ASML is pleased with Şahin's new certification and with her hunger to learn new things. "My managers always supported me", says Şahin. "We define development goals, and select the training that would achieve those targets. If it helps you achieve your goal, it's very easy to take courses when you're working at ASML."

Şahin is far from done. For her, learning never stops. "I just started a master's programme at KU Leuven. It's an advanced master in safety engineering, and it's connected to my position at ASML. My short-term goal is to complete this master's. After that I want to continue my career here at ASML as a system engineer. Learning, however, is a goal in itself for me, whether it's connected to my job or not."

This article is written by Tom Cassauwers, freelancer at Bits&Chips.