During multiple meetings this week (online, obviously), the same challenge came up: companies and their customers are extremely poor at precisely defining the outcome they want to accomplish. At first blush, everyone I meet claims to know exactly what they want to achieve, but when push comes to shove and they are asked to define it more precisely, the lack of specificity quickly becomes apparent.

This is, of course, far from the first time I’ve encountered this, and interestingly, companies use a variety of words to cover up the lack of specificity. Words like customer value, quality, speed, reliability and robustness are often used as generic terms that sound good but prove to be void of any real meaning when investigated in more depth.

The challenge is that not being clear on your desired outcome causes all kinds of problems for organizations. One is that prioritization tends to be driven by the loudest customer. Customers tend to make a lot of noise about whatever they happen to care about at the moment, especially if it’s not working well, and they contact the company complaining about deteriorating quality. If you haven’t defined what you mean by quality and you don’t have metrics to track its current level, it’s very easy to simply go along with the customer’s opinion and allocate significant resources to addressing this supposed quality problem. The opportunity cost is enormous, as the alleged problem might be minor and those resources might well have been put to much better use elsewhere in the company.

A lack of precisely defined desired outcomes creates several issues inside the organization as well. First, it can easily lead to teams whose efforts conflict because they interpret success differently. For instance, teams looking to improve customer value by developing a simpler, more intuitive user interface will run into conflict with those that seek to improve customer value by developing additional features or more configuration options and variants of existing features. The latter need UI real estate to expose users to the additional functionality and options, which can easily undo the efforts of the former.

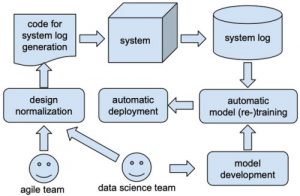

Another aspect, which I’ve written about before, is the inefficiency of development that isn’t grounded in precisely, or rather quantitatively, defined customer value. For a company allocating 5 percent of its revenue to R&D and an average FTE cost of 120 k€/year, an agile team of 8 people working in 3-week sprints costs around 60 k€ per sprint but has to generate 1.2 M€ (60 k€/0.05) in business value per sprint. Vague statements about quality, speed, reliability and value do not help organizations accomplish this outcome.
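The arithmetic behind these figures can be checked with a short Python sketch. It assumes the team’s cost is spread evenly over 52 weeks per year and treats the required business value as the sprint cost divided by the R&D share of revenue; the function names are illustrative only. Unrounded, the sprint cost comes to about 55 k€ and the required value to about 1.1 M€, which the text rounds up to 60 k€ and 1.2 M€.

```python
# Assumption: the yearly team cost is spread evenly over 52 weeks.
WEEKS_PER_YEAR = 52


def sprint_cost(team_size: int, fte_cost_per_year: float, sprint_weeks: int) -> float:
    """Cost of one sprint for the whole team, in euros."""
    return team_size * fte_cost_per_year * sprint_weeks / WEEKS_PER_YEAR


def required_value(cost: float, rd_share_of_revenue: float) -> float:
    """Revenue a sprint must justify if R&D spending is a fixed share of revenue."""
    return cost / rd_share_of_revenue


# Parameters from the example in the text.
cost = sprint_cost(team_size=8, fte_cost_per_year=120_000, sprint_weeks=3)
value = required_value(cost, rd_share_of_revenue=0.05)

print(f"sprint cost: {cost / 1000:.1f} k€")        # 55.4 k€
print(f"required value: {value / 1e6:.2f} M€")     # 1.11 M€
```

Changing the R&D share shows how sensitive this bar is: at 10 percent of revenue, the same sprint would need to justify roughly half the value.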

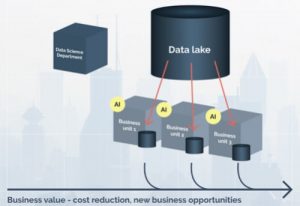

In conclusion, many organizations fall short in precisely defining the desired outcome of initiatives, projects, products and so on. Resources are allocated because of loud customers, historical patterns, ingrained behaviors or internal politics, not because they provide the highest ROI for the company. In our research, we work with companies on value modeling to overcome these challenges and help them start defining desired outcomes precisely and quantitatively. Contact me if you want to know more.