High-precision mechatronics is one of the strengths of the region. To maximize system performance, it is crucial to have a good metrology and calibration strategy. “Think ahead,” advises Rens Henselmans, teacher at High Tech Institute. “And be aware of what is really needed.”

Suppose you want to build a machine that can drill a hole in a piece of metal. The holes have to be drilled with such a level of accuracy that, once drilled, two separate pieces will fit perfectly together and can be connected with a dowel. What would that machine look like? And how will you reach the required precision? When you drill both holes slightly skewed in the same way, the pin will probably still fit. But if the deviation is not the same from one piece to another, you are screwed. And what if you place two drilling machines next to each other and combine their outputs; what will be the requirements then? Or more extreme, what if you buy the first part in China and the second in the US, what measures are necessary to ensure the dowel fits?

Even in an example as simple as drilling a hole, it turns out that it isn’t at all trivial to reach a superhigh level of accuracy. Parameters such as measurement uncertainty, reproducibility and traceability must be well defined. If you haven’t mastered that as a system designer, you can forget about accuracy.

The term accuracy is often misused, says Rens Henselmans, CTO of Dutch United Instruments and teacher at High Tech Institute. “It is a qualitative concept: something is accurate or not. But there is no number attached to it,” he explains. That in itself is not a bad thing, he has experienced, “as long as everyone knows what is meant. Usually, it concerns the measurement uncertainty. That is, a certain value plus or minus one standard deviation.”
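
To make “a certain value plus or minus one standard deviation” concrete, here is a minimal sketch. The readings are hypothetical and only illustrate how a mean and a one-sigma spread could be reported; a real uncertainty statement would also cover systematic contributions.

```python
import statistics

def measurement_result(readings):
    """Return (mean, sample standard deviation) of repeated readings."""
    mean = statistics.mean(readings)
    std = statistics.stdev(readings)  # sample standard deviation (n - 1)
    return mean, std

# Hypothetical repeated measurements of a hole diameter, in millimeters.
readings_mm = [10.002, 9.998, 10.001, 9.999, 10.000]
mean_mm, std_mm = measurement_result(readings_mm)
print(f"diameter = {mean_mm:.4f} mm ± {std_mm:.4f} mm (one standard deviation)")
```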

Rens Henselmans: ‘You can’t add calibration in your system afterwards.’

The meter

Reproducibility is often mixed up with repeatability. The latter term describes the variation that occurs when you repeat processes under exactly the same conditions. “Same weather, same time of day, same history,” says Henselmans, summing up the list of boundary conditions. “Reproducibility is the same variation, but under variable conditions, such as a different operator or even a different location. It is the harder version of repeatability since more factors are in play.” However, that system requirement is essential. “Without reproducible behavior, you have nothing,” declares Henselmans. “If your machine doesn’t always do the same thing, you can’t correct or calibrate system errors. Reproducibility is the lower limit of what your machine will ever be able to do, if you could calibrate the systematic errors perfectly.”
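
The difference between the two terms can be sketched with a few numbers. The example below takes the description above literally: repeatability is the spread within one fixed condition, reproducibility the spread once conditions vary. The operators and readings are hypothetical, and formal gauge studies split the variance in more detail than this.

```python
import statistics

def repeatability(groups):
    """Pooled standard deviation within each condition (same operator, same setup)."""
    # Pool the within-group variances, weighted by their degrees of freedom.
    num = sum((len(g) - 1) * statistics.variance(g) for g in groups.values())
    den = sum(len(g) - 1 for g in groups.values())
    return (num / den) ** 0.5

def reproducibility(groups):
    """Standard deviation over all readings, across all conditions."""
    all_readings = [x for g in groups.values() for x in g]
    return statistics.stdev(all_readings)

# Hypothetical readings in millimeters, taken by two different operators.
groups_mm = {
    "operator_a": [10.001, 10.002, 10.000],
    "operator_b": [10.006, 10.005, 10.007],
}
rep_mm = repeatability(groups_mm)
repro_mm = reproducibility(groups_mm)
print(f"repeatability   = {rep_mm * 1000:.2f} um")
print(f"reproducibility = {repro_mm * 1000:.2f} um")
```

In this made-up data set each operator is very consistent, but the two disagree with each other, so the spread under variable conditions is clearly larger than the spread under fixed conditions.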

Then traceability. “Internationally, we have made agreements about the exact length of a meter,” says Henselmans. “At the Dutch measurement institute NMI, they have a derivative of this, and every calibration company has a derivative of that. The deeper you get into the chain, the greater the deviation from the true standard and therefore the greater the uncertainty. When you present a measurement with an uncertainty, you should actually indicate how the uncertainties of all parts in the chain can be traced back to that one primary standard. Very simple, but it is often forgotten when talking about accuracy.”
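
How uncertainty grows along such a chain can be sketched as follows. The sketch assumes that each link adds an independent standard uncertainty, so the contributions combine as a root sum of squares; the link names and values are hypothetical and not taken from any real calibration certificate.

```python
import math

def combined_uncertainty(contributions):
    """Root-sum-square of independent standard uncertainties."""
    return math.sqrt(sum(u ** 2 for u in contributions))

# Hypothetical standard uncertainties added by each link, in nanometers.
chain_nm = [
    ("national standard", 3.0),
    ("calibration company reference", 4.0),
    ("gauge on the shop floor", 12.0),
]

# Walk down the chain and report the accumulated uncertainty at each level.
accumulated = []
for i, (name, _) in enumerate(chain_nm):
    u = combined_uncertainty(value for _, value in chain_nm[: i + 1])
    accumulated.append(u)
    print(f"{name}: {u:.1f} nm")

total_nm = accumulated[-1]
```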

Fortunately, that is not always necessary. “When you expose a wafer, it doesn’t matter at all whether or not the diameter of that wafer is exactly 300 mm,” says Henselmans. “The challenge is to get the patterns neatly aligned. And even if the pattern is slightly distorted, it’s not disastrous, as long as that distortion is the same in every layer. It only gets tricky when you want to do the next exposure on a different machine, or even on a system from another manufacturer. Then they must at least all have the same deviation. Gradually, you come to the point that you want to trace everything back to the same reference and thus ultimately to the meter of the NMI.”

Common sense

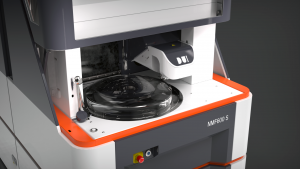

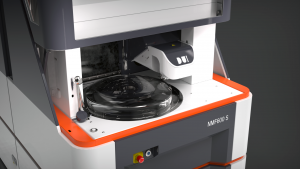

What is really needed depends strongly on the application and on the budget you are given as a designer. “Technicians are prone to want too much and to show that they can meet challenging requirements. But that often makes their design too expensive,” warns Henselmans. His company, Dutch United Instruments, is developing a machine to measure the shape of aspherical and free-form optics, based on his PhD research from 2009. “At the start of that project, we wanted to achieve a measurement uncertainty of 30 nanometers in three directions. At some point, the penny dropped. Optical surfaces are always smooth and undulating. If you measure perpendicular to the surface with an optical sensor, an inaccuracy in that direction is a one-to-one measurement error. That’s where nanometer precision is really needed. But parallel to the surface, you don’t measure dramatic differences. Laterally, micrometers suffice. That insight suddenly made the problem two-dimensional instead of three-dimensional.”

During the training, Henselmans regularly uses the optics measuring machine from his own company, Dutch United Instruments, as an example.

So always use common sense when thinking about accuracy. “It is okay to deviate from the rules, as long as you know what you are doing,” says Henselmans. The required knowledge comes with experience. “You learn a lot from good and bad examples.” That is why Henselmans uses many practical examples during the training ‘Metrology and calibration of mechatronic systems’ at High Tech Institute, including his own optics measuring machine and a pick-and-place machine. “We do a lot of exercises and calculations with hidden pitfalls so participants can learn from their own mistakes.”

Abbe

As for the metrology in your machine, you have to think carefully about where to place the sensors. “Think of a caliper,” says Henselmans. “The scale there is not in line with the actual measurement. So, if you press hard on the jaws, they tilt a bit and you get a different result. This effect occurs in almost all systems, even in the most advanced coordinate measuring equipment. Between the probe and the ruler in those machines you’ll find all kinds of components and axes that can influence the measurement.”
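
The size of this effect, commonly known as the Abbe error, can be estimated with simple trigonometry: the error is roughly the offset between scale and measurement line multiplied by the tangent of the tilt angle. The sketch below uses hypothetical caliper dimensions purely for illustration.

```python
import math

def abbe_error(offset, tilt_rad):
    """Length error caused by a tilt when measuring at `offset` from the scale axis.

    The result has the same unit as `offset`.
    """
    return offset * math.tan(tilt_rad)

# Hypothetical caliper: the jaws touch the part 40 mm away from the scale,
# and pressing on them tilts the sliding jaw by 0.5 milliradian.
offset_mm = 40.0
tilt_rad = 0.5e-3
error_um = abbe_error(offset_mm, tilt_rad) * 1000.0  # convert mm to micrometers
print(f"Abbe error = {error_um:.1f} um")
```

Halving either the offset or the tilt halves the error, which is why it pays to bring the sensor as close to the measurement line as possible.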

Bringing awareness to these effects is what Henselmans calls one of the most important lessons of the training. “It comprises the complete measurement loop with all elements that contribute to the total error budget,” he explains. Generally speaking, you want to keep that loop small and bring the sensor as close to the actual measurement as possible. “Unfortunately, there is often a machine part or a product in the way which makes it difficult to comply with that Abbe principle. Also, you should realize that you are not alone in the world. The metrologist might indeed prefer short distances to achieve the highest accuracy according to the Abbe principle. The dynamics engineer, however, would prefer to measure in line with the center of gravity, otherwise all kinds of swings will disrupt his control loops. The metrologist will argue that these oscillations are interesting precisely because they influence system behavior. Together, they have to find the right balance.”

Making that decision is one of the discussion points in the course. One important aspect of this discussion is the need to have sufficient knowledge of the various sensors, and their advantages and disadvantages. During the training, interferometers, encoders and vision technology, among others, are therefore explained by specialists.

Reversed spirit level

Once you’ve got the metrology and reproducibility in your system in order, it’s time for calibration. “To correct for systematic errors,” Henselmans clarifies. The second half of the training is about how to do that. “The lesson to be learned is that you can’t add calibration in your system afterwards. You have to consider in advance how you are going to carry out the calibration and where you need which sensors and reference objects. If you wait until the end of your design process, you surely won’t be able to fit them in anymore.”

Before you paint yourself into a corner, you must have a list of error sources and know which ones you need to calibrate and, above all, how you are going to do that. Henselmans: “During my time at TNO, we once made a proposal for an instrument to measure satellites. A system about a cubic meter in size. We could test that in our own vacuum chamber. We had already set up all kinds of test scenarios when one of the optical engineers pointed out that you had to do a certain measurement at a distance of about seven meters, since that was where the focal point lay. So we had to carry out the calibration in a special chamber at a specialized company in Germany, which cost thousands of euros per day. It’s nice that we found this out before we sent our offer to the client.”

There are certainly calibration tools and reference objects available on the market, but in Henselmans’ experience you get stuck pretty quickly. “Certainly for larger objects, the list of options dries up quickly,” he says. Designers then have to fall back on ingenious tricks like reversal. “A wonderfully beautiful and simple concept,” says Henselmans, and he explains: “Think of a spirit level. You can hold it against a door frame to determine how skewed it is. Then turn the spirit level over and see if the bubble is now exactly on the other side of the center. If not, the vial is apparently not properly aligned within the spirit level. You then have two measurements, so two equations with two unknowns, which means you can calibrate the offset of the spirit level and the door at the same time. You can use that trick in more complicated situations, with more degrees of freedom and nanometer accuracy. That means you can get much further than with tools available commercially.”
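
The two equations with two unknowns can be written out in a few lines. The sketch assumes a simple model in which the first reading is the door tilt plus the vial offset, and the reversed reading is minus the door tilt plus the same offset; the function name and the numbers are hypothetical.

```python
def spirit_level_reversal(reading_normal, reading_reversed):
    """Separate the door tilt from the vial offset using two readings.

    Assumed model:
        reading_normal   =  door_tilt + level_offset
        reading_reversed = -door_tilt + level_offset
    """
    door_tilt = (reading_normal - reading_reversed) / 2
    level_offset = (reading_normal + reading_reversed) / 2
    return door_tilt, level_offset

# Simulate a door that leans 1.5 mrad, measured with a level whose vial is off by 0.4 mrad.
true_tilt_mrad, true_offset_mrad = 1.5, 0.4
r_normal = true_tilt_mrad + true_offset_mrad
r_reversed = -true_tilt_mrad + true_offset_mrad

tilt_mrad, offset_mrad = spirit_level_reversal(r_normal, r_reversed)
print(f"door tilt = {tilt_mrad:.2f} mrad, level offset = {offset_mrad:.2f} mrad")
```

Neither reading on its own reveals the door tilt, but the half difference and half sum recover both unknowns without any external reference.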

Even better is to incorporate this technique in your design so that the machine can calibrate itself. “Make it part of the process of your machine,” advises Henselmans. “Then the stability requirement of the system drops drastically, and the system design becomes much simpler.”

This article is written by Alexander Pil, tech editor of High-Tech Systems.

Recommendation by former participants

At the end of the training, participants are asked to fill out an evaluation form. To the question 'Would you recommend this training to others?' they responded with an 8.7 out of 10.