As a project manager, system architect and crisis manager in the high-tech industry, Luud Engels has a reputation for not mincing words. In addition to his consultancy work, he recently started as a trainer in system architecting at High Tech Institute. “Clear communication is key in complex development environments.”

Don’t try telling system architect Luud Engels how open-minded and communicative we are in Dutch high tech. He’ll respond forcefully, underlining just how hypocritical it is to believe that. “Here in the Brabant region, we’re not that open at all. Just stand at a coffee machine and listen. We’re not talking with you, we’re talking about you.”

When it comes to direct communication, or rather confrontation, Engels has a reputation. A few months ago, he was sent packing after telling a client, too forcefully in the client’s view, what was wrong within the company. “I’m convinced that at the right time, you can say anything to anyone, be it in a team meeting or in a one-on-one discussion. Of course, most Dutch people don’t do that. But I don’t seem to excel at it either, because I sometimes put things so bluntly that people tell me to get lost.”

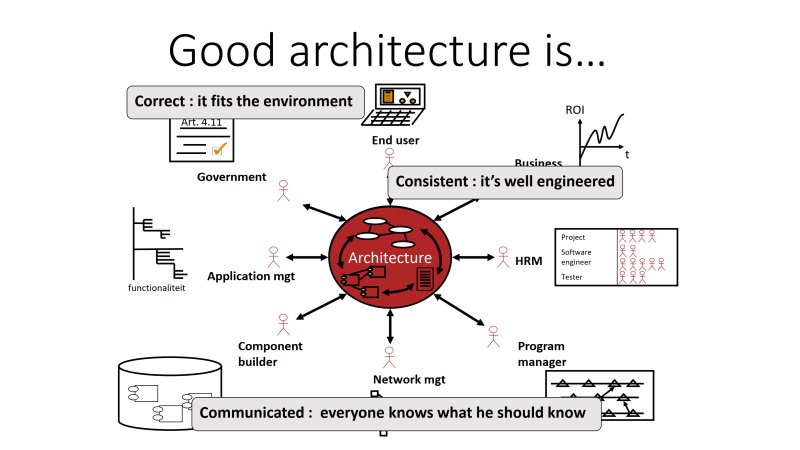

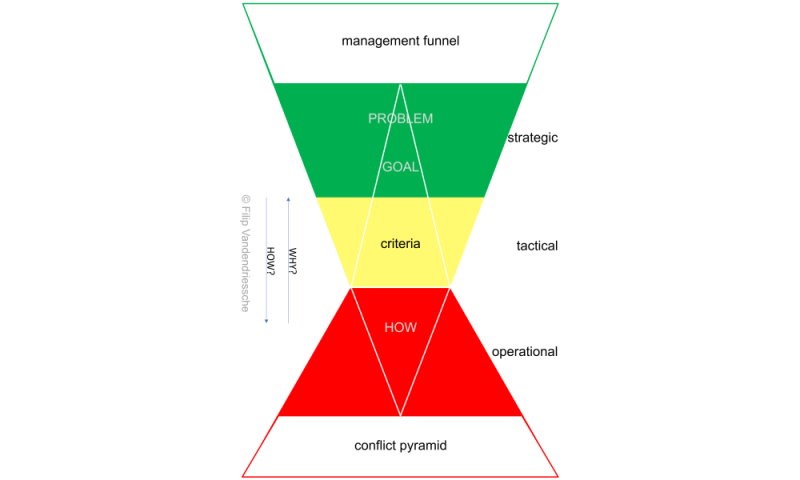

Engels’ appreciation of factual and clear communication comes from his many years of experience as a project manager, a system architect, a crisis manager and a member of the management team at engineering firm TMC. His advice for development environments: “Speak your mind. Also about personal stuff. It’s perfectly fine to tell someone his blue shirt bothers you. But statements like ‘Microsoft sucks and Apple is good’ don’t help. Make it factual: are we going to work object-oriented or process-oriented? Are we going to use glass or titanium? What are the advantages? What are the disadvantages? When it comes to glass, I don’t need to know the whole history of glassmaking. I want the five key criteria, expressed in numbers, not in pluses and minuses. If you know the dominant parameters, you also know how to measure them, and we can agree on the first development steps to make those measurements possible.”

Engels emphasizes that in the development of high-tech systems, several roads lead to Rome and that it’s important to stick to a choice once it’s made. “Make sure the whole team is at least on the same path, rather than endlessly searching for the one right solution, which by definition doesn’t exist.”

But sometimes, even the simplest of things can go wrong. “Once, after a positive conversation with a client, I received the report in colloquial Dutch. I asked if the client representatives had approved the text. Of course, they had not. So I insisted on writing it down in English, presenting it to the client and asking them for their approval. After all, it’s often about decisions with far-reaching consequences. Still, syncing with the customer proved a daunting task.”

The laws of Luud

- If the financial people take over, the engineering interest becomes secondary; if the engineers take the lead, the company goes broke (About balancing tech and money in high-tech OEMs)

- The client who asks for a crisis to be averted is half the culprit or part of the crisis in question (About crisis management)

- I firmly believe in the power of the outsider (About the crisis manager)

- We talk past each other: one talks in newtons per square meter, the other in bits per second (About communication and collaboration in high tech)

- A crisis doesn’t go away by getting rid of the people who put their finger on the sore spots (About stranded development projects)

The outsider

Engels’ extensive technical career started with a degree in electrical engineering, after which he joined Sattcontrol, a Swedish industrial automation specialist. He programmed PLCs for egg-grading machines, dairy factories and automated warehouses. Later, he switched to Fortran on PDP and VAX minicomputers.

After five years, Engels moved to Cap Volmac (later Cap Gemini), where he worked on projects. While he mainly worked in engineering, Cap’s core business was business automation. “I learned a great deal about developing computer systems and software according to the rules.”

Engels’ first Cap assignment was at ASML. He then worked on highway signaling for the Dutch Department of Waterways and Public Works, eventually taking on leadership roles. Later, audits were added to the mix. He estimates that he has assessed about twenty projects. “After a day of walking around, you know what’s going on and where the project went wrong,” he says. With a smile: “And certainly not because I’m so smart, or because I’ve seen so much, but mainly because I was an outsider.”

Engels firmly believes in the power of the outsider. “You arrive at companies where things have gone completely wrong, and then you’re allowed to walk around and speak to five to ten people. They all have an opinion about the project in crisis. You get to hear the whole story. People want to pour their hearts out. You hear what’s wrong and, above all, what others aren’t allowed to say.”

The headstrong technician

Technicians are a stubborn, headstrong type, and Engels should know, as he certainly fits that mold. “We’re engineers, aren’t we? We think like this: ‘I’m an electrical engineer and according to my calculations, it’s 5 volts. If you don’t get it, I’ll explain again, but the outcome remains 5 volts. You’re crazy, not me.’ In projects, however, it’s mainly about effective collaboration. That’s the difficult part. One talks in newtons per square meter, the other in bits per second. One talks about the goal, the other about the solution. High tech is one big Tower of Babel. That starts with requirements and continues through design, integration and testing. The same applies to me: if I run a project myself and an outsider comes in, he or she will shoot holes in it too.”

Luud Engels will lead the mid-November edition of the System Architect (Sysarch) training in Leuven (Belgium).

Engels prefers to step in when the crisis is at its deepest. Take the Fusion project that ran at Philips at the end of the nineties. Its ambitious goal was to use a single platform to cover the mechanical, electrical and software construction for medical diagnostic systems. The idea was that cost savings through reuse would justify the extensive operation. “The director outlined his problem as follows: every month, thirty new developers joined the project and every month, they told him that completion was delayed for another two months.”

Engels, again, applied the power of the outsider. “The outsider is allowed to speak up. The deeper the crisis, the more receptive one is to outside messages. Usually, other people have already had a look at it. But often, they put their fingers on sore spots that they weren’t allowed to point to and ended up having to leave. They asked me to replace the current project leader because he couldn’t make up for the delay. But a crisis doesn’t go away when you get rid of the people who put their finger on the sore spots. Instead, I went to help the incumbent project leader. Together, we contained the crisis by adjusting the scope and working with early feedback. One of my laws is: the client who asks for a crisis to be averted is half the culprit or has at least a dominant part in it.”

Is it tunnel vision?

“Please note: you’re talking about very competent people with very relevant arguments and tons of knowledge. But gradually, the solution or working method has been placed in different silos. Very skilled people wear down paths, creating trenches that are so deep that you can barely look over the edge. Everyone has his trench and is defending it stubbornly. You hear people say things like: ‘This isn’t negotiable!’ When you hear that, it points you to where it went wrong and where a possible beginning of the solution lies.”

Where does the solution start?

“The first law of crisis management is containment. With Fusion, it meant that they had to stop adding thirty people per month. Instead, they had to cut twenty a month and reduce scope. The deeper cause, in my opinion, was pure self-overestimation. The platform idea for software alone is a major challenge. But when you start including mechanics and electronics, for all diagnostic products, it becomes too much at once. It’s difficult enough to develop electronics, software and mechanics together for a single system, but trying to develop one platform for different product lines in one project is naive, to say the least. At the time, they also had to work with developers in Bangalore, and they wanted to go from CMM level 2 to level 3 at the same time. That had to stop right away. You need to limit the scope of a project in crisis and postpone long-term improvement initiatives.”

“It’s often the case that the technicians already know what’s wrong and so does management. Both are right, but they won’t reach a solution together. Much later, I did a job at Philips DPS, where I saw that Philips had made significant progress. Putting fingers on sore spots, however, was still not allowed, unfortunately.”

How does this get done the right way?

Start small, says Engels. “You need early feedback, preferably a launching customer. I’ve heard Martin van den Brink say it many times at ASML: put everything together, show me that it works. Then he challenges people by stating: ‘Your physics don’t work.’ There was a lot of that during early integration. Much later, the industry introduced fancy words for it, calling it Scrum, Agile and rapid development. But the point is that you need feedback, and it’s important to start getting it at an early stage. The goal has to be to deliver every six weeks and to deliver something that actually works. If not, you have the means available to find out why it failed, why the physics didn’t work. At that point, you might have to accept that you’re not going to meet your deadline. What you definitely shouldn’t do is bring in more people.”

“When technicians tell you they need more time to investigate something, you have to get suspicious. Van den Brink is also a master at assessing or challenging that.”

Another necessity: “Make people owners of a problem. Certainly in environments with complex developments, where there’s not even the beginning of a solution and new inventions are required, everyone feels like the master of their own idea, with their own personal insight. We Dutch are also very good at seizing every opportunity to discuss this in the broadest sense. But you simply need to take the next step. That’s the only thing a project benefits from. So if you’re sitting in a room with thirty people and problems come up, the project manager, the crisis manager or the system architect must assign an owner to each problem. That includes setting deadlines for results and decisions.”

According to Engels, this kind of ownership is firmly rooted in ASML’s culture, but at one point it got out of hand there. “They appointed an owner for everything and called him a project leader. McKinsey once did an analysis at ASML of project leaders and project sizes. They found that, on average, there were 1.2 people on each project, including the project leader! Then you run the risk that these owners, these project leaders, start competing for available resources and the underlying issue fades into the background.”

Engels has extensive experience as a project manager, system architect and crisis manager in the high-tech industry.

The product manager defines the product that will perform well in the market. He determines the available budget – often too little – and negotiates with the system architect whether it can be made for that money. Engels: “It’s a balancing act. With mature products, it works differently, but with a first development, you want a proof of concept as soon as possible. Or at least a confirmation that your ideas are right and that you’re on the right track.”

To what extent should the system architect, like the product manager, talk directly to customers?

“In high tech, that’s beyond dispute. That’s where the product manager and the system architect come together. They have to. The former has more business focus, the latter looks at the technology and whether it’s feasible. They’re two sides of the same coin. This collaboration between the product manager and system architect is becoming more and more commonplace. However, I still see system architects who downplay the necessary coordination with the project manager or operational management. You then run the risk that a solution that perfectly meets market needs will ultimately fail in the realization phase.”

In smaller development projects, with ten to twenty developers, one person can take on the role of both project manager and system architect. In larger projects, with tens or hundreds of developers and several dozen suppliers, it’s important to split the roles. Engels has experience in both. “The project manager sets hard deadlines and a system architect has to work with them.”

“The project manager must define which issues the system architect still has to solve and with whom. Together, you discuss the ins and outs, weigh the benefits and concerns, decide on key parameters, and then the project manager tells the system architect: at the end of next week, we’ll make a decision! It’s all about direction: coming up with a format that involves knowledgeable people and arrives at quantified statements with which you can really make an assessment.”

A system architect has a major impact on product development, yet often has a less than visible role.

“He’s an experienced technician, but his value lies primarily in his view of the business. Ninety-nine times out of a hundred, the system architect knows the market in which his product or system is going to land. This is necessary to translate the market and product requirements into the system requirements and then outline the design.”

It takes quite a bit of experience to reach that level. At the same time, Engels observes that the concept of a system architect is subject to inflation. “Nowadays, there are architects all over the place. A software architect is usually a senior software developer, a requirements engineer or someone in charge of engineering. I wouldn’t say anything to the detriment of such a lead engineer. Still, the difference with the system architect is that the latter has to know the business, understand how value is generated and thus understand why it has to be done within a certain amount of time and money.”

“This is also the case in construction. Your architect asks you what you are going to do with your future house and adapts his design accordingly. Are you going to cook a lot, or do you mainly want to drink wine? That’s why Van den Brink does so well at ASML. He goes to customers and explains what kind of litho systems they need. He knows the market like no other. What’s more, he dictates the market. He understands the goals and the timing of chip manufacturers, including what their production processes look like. If they talk about critical dimension and overlay, he can explain that his machine can deliver it and also substantiate why.”

This article was written by René Raaijmakers, tech editor of Bits&Chips.

Recommendation by former participants

At the end of the training, participants are asked to fill out an evaluation form. To the question ‘Would you recommend this training to others?’, they responded with an 8.5 out of 10.