When Johan Oedzes embarked on the Embedded Linux course at High Tech Institute, he wasn’t an absolute novice in the topic. However, reflecting on his journey, he confides, “I regret not taking the course earlier.”

“The combination of software and electronics has always piqued my interest due to the interaction with the tangible world,” says Johan Oedzes. This interest led him to the University of Twente, where he completed his Bachelor’s degree in electrical engineering and subsequently delved deeper into the field with a Master’s in embedded systems.

After graduating, Oedzes secured a position at a big company in Hengelo, focusing on C++ software engineering on Linux, albeit not in the embedded sense, he explains. “Although I learned a lot there, it started to bother me that I wasn’t working on embedded systems. I also felt that I was operating within the constraints that other people had thought out. I wanted to do the innovative and exploratory part of engineering too.”

One of his more experienced colleagues shared Oedzes’ sentiment and moved to Beeliners, based in Hengelo as well. The two kept in touch and his ex-colleague asked Oedzes whether he could share his contact information with the company’s owners. When commercial director Dennis Wissink called two years ago, Oedzes decided to take the plunge and he joined Beeliners as an embedded software engineer.

E-mobility

Beeliners immediately resonated with Oedzes’ interests, he says. “All our products combine a hardware design with embedded software engineering to create a prototype or deliver a proof of concept for our clients. Such projects may encompass compact medical appliances, intelligent gym equipment or innovative e-mobility devices. I’m currently working on a product in the e-mobility sector.”

One of Beeliners’ clients wasn’t satisfied with an externally sourced e-mobility control unit and approached the company for a solution. At the client’s request, Beeliners set out to build its own. The control unit links to two external systems: the e-mobility device on the one hand and the internet on the other. The internet connection enables communication with a backend server and reception of firmware updates.

“We separated the system into two parts,” Oedzes explains. “Everything that needs real-time behavior and has strict timing requirements runs on a subsystem with a microcontroller, interfacing with the e-mobility device. The code for the backend connection, the web interface and the product’s business logic runs on an embedded Linux system with a C++ application.”


Flexibility

Although Oedzes had some experience using embedded Linux systems from his university studies, setting one up was uncharted territory for him. During the e-mobility project at Beeliners, he taught himself how to do it and created a tailored embedded Linux system based on the Yocto Project. “You can find a lot of information about tools to create embedded Linux distributions, such as Yocto and Buildroot. It took some searching and experimenting, but eventually, we had a working system, even including functionality for remote updates.”

“At that time, Yocto felt like the most widely accepted solution. Renowned companies working on embedded Linux were using it and many software providers offer a Yocto recipe to create packages of their software with BitBake. Recipes are a powerful concept, and it’s one of the reasons for choosing Yocto for this project.”

Because this was the first time that they created an embedded Linux system, Oedzes and his colleagues had some questions: “How do I know that my product is good? Does my embedded Linux system do what it’s meant to do? Is it secure?” Beeliners had progressed to initial field testing with a functioning prototype, but they wanted some validation of their approach before finalizing the product.

Initially, Beeliners thought of hiring external expertise for a comprehensive evaluation. However, they wanted a quicker, lighter approach and preferred building this expertise internally, Oedzes emphasizes. “This quest for knowledge led us to explore training options. Given a prior positive experience by one of our colleagues with High Tech Institute’s ‘Good software architecture’ course, we went looking for a similar program for embedded Linux, and we found that they had one.”


As Oedzes wasn’t an absolute novice in embedded Linux, he wondered whether the course was relevant for him. “We engaged in a conference call with Jasper Nuyens, the course’s trainer, who listened to our questions. He concluded that we were well on our way but had some knowledge gaps on embedded Linux basics and rules of thumb in this domain. He also reassured us of the course’s flexibility to accommodate our specific questions.” Consequently, Oedzes enrolled in the embedded Linux course.

Better decisions

While attending the embedded Linux course, Oedzes continued to benefit from Nuyens’ experience. The course showed him that Buildroot might have been more suitable for his use case. “If I had enrolled in the course earlier, maybe we would have still chosen Yocto, but we would have certainly given more consideration to Buildroot.”

Yocto excels in use cases where various devices each require some hardware-specific configuration as well as a common part. You can then build a Yocto project with various subconfigurations for each device to create a custom Linux image, Oedzes explains. “This is a powerful approach, but we didn’t need this for our use case: it has one device and just a couple of minor hardware revisions. Yocto wasn’t a bad choice, but in the course, I learned that Buildroot would have been a better fit.”

The course also allowed Oedzes to discuss various security aspects of his e-mobility project. “Jasper asked me the right questions, like: what problem are you trying to solve, what threats do you want to protect against, is your web interface externally accessible? It’s actually all quite logical, but I learned a lot by reasoning about our product with him.”

In retrospect, Oedzes would advise prospective participants to take the embedded Linux course earlier than he did. “If you know that you need an embedded Linux system in your product and have some C/C++ programming experience, the course has immense value. Jasper covers various options and explains for which use cases each of them is suitable.”

Oedzes also found Nuyens’ explanation of cross-compiling software for another target architecture quite good for beginners. “Yes, figuring this out yourself is possible, but if you’re starting with embedded Linux, a course like this provides an encouraging head start and warns you about common errors.”

Even though Oedzes had previous experience with embedded Linux, the course armed him with important tips and insights. “I familiarized myself with new tools and gathered Jasper’s valuable advice about our e-mobility project. The experience of our current project coupled with the insights from this course gives me much more confidence for making better decisions for Beeliners’ future embedded Linux projects.”

This article is written by Koen Vervloesem, freelancer for Bits&Chips.

Recommendation by former participants

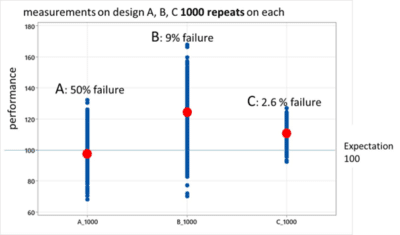

At the end of the training, participants are asked to fill out an evaluation form. To the question 'Would you recommend this training to others?' they responded with an 8.6 out of 10.