Academics who are experts in control theory often have difficulty in designing a controller for industrial practice. On the other hand, many mechatronics professionals who come into contact with control technology lack the theoretical basis to bring their systems to optimum performance. The Motion Control Tuning training offers a solution for both target groups. “Once you’ve gone all the way through it, you can design a perfect control system yourself in just a few minutes,” says course leader Tom Oomen.

How do you ensure that a probe microscope scans a sample in the right way with its nanodial needle? How can a pick and place machine put parts on a circuit board in a flash while still achieving super precision? How can a litho scanner project chip patterns at high speed and just the right position on a silicon wafer? It’s all about control engineering, about motion control.

It’s this knowledge that’s in the DNA of the Brainport region. Motion control is at the heart of accuracy and high performance. The success of Dutch high tech is partly due to the control technology knowledge built up around the city of Eindhoven in the Netherlands.

Technological developments at the Philips divisions Natlab and Centrum voor Fabricagetechnologie (CFT) made an important contribution to the development of the control technology field in the eighties and nineties. Time and time again, however, there was a hurdle to be overcome. When engineers in the product divisions started working with it, it was not so easy to convert the technology and theoretical principles that had been developed into industrial systems.

Students work with a very simple two-mass-spring-damper system.

Training courses Advanced Motion Control and Advanced Feedforward & Learning Control

That is why Philips realised in the 1990s that it had to transfer its knowledge effectively. This resulted in a course structure with a very practical approach. The short training courses of at least three days are intensive, but when participants return to work, they can apply the knowledge immediately.

Motion Control Tuning (MCT) was one of the first control courses set up at Philips CFT in the 1990s by Maarten Steinbuch, currently professor at Eindhoven University of Technology. Today, Mechatronics Academy develops and maintains the MCT training and markets it in collaboration with High Tech Institute, together with the Advanced Motion Control and Advanced Feedforward & Learning Control training courses.

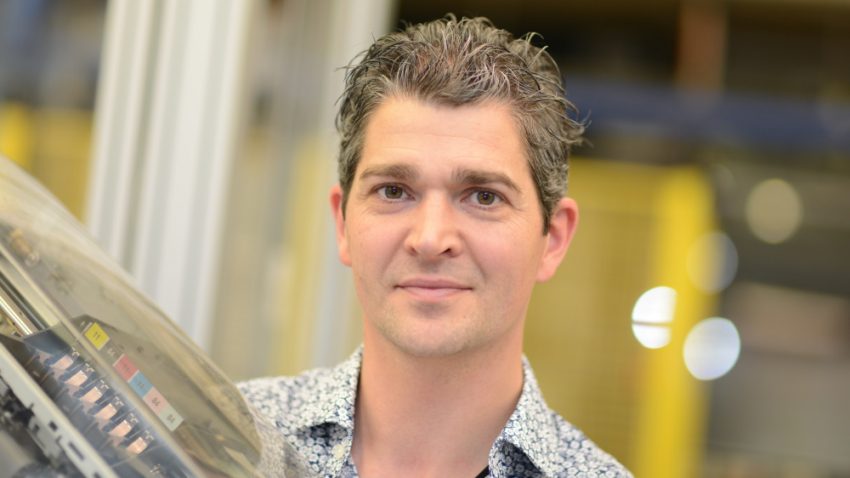

MCT trainer Tom Oomen

Tom Oomen, associate professor at Steinbuch’s section Control Systems Technology of the Faculty of Mechanical Engineering at Eindhoven University of Technology, is one of the driving forces behind these three courses. “The field is developing rapidly,” says Oomen, “which means a lot of theory, but the basis, for example how to program a PID controller, has remained the same.”

The Motion Control Tuning (MCT) training provides engineers with a solid basis. Participants are often developers with a thorough knowledge of control theory who want to apply their knowledge in practice but encounter practical obstacles. The surprising thing is that each edition is always joined by a number of international participants. It says a lot about how the world views the Dutch expertise in this field.

Motion control training students can roughly be divided into two groups. The first are people with insufficient technical background in control technology, who do have to deal with control technology on a daily basis. They want to learn the basics in order to be able to communicate better with their colleagues. “These people do design controllers, but don’t understand the techniques behind them. They make models for a controller, without knowing exactly what a controller can do. This causes communication problems between system designers and control engineers,” says Oomen.

Control engineers traditionally design a good controller on the basis of pictures, the so-called Bode and Nyquist-diagrams. “For seasoned control engineers, those diagrams are a piece of cake, but if you’ve never learned to read those figures, it’s still abracadabra. Then you can turn the knobs any way you want, but you’ll never design a good controller”, says Oomen.

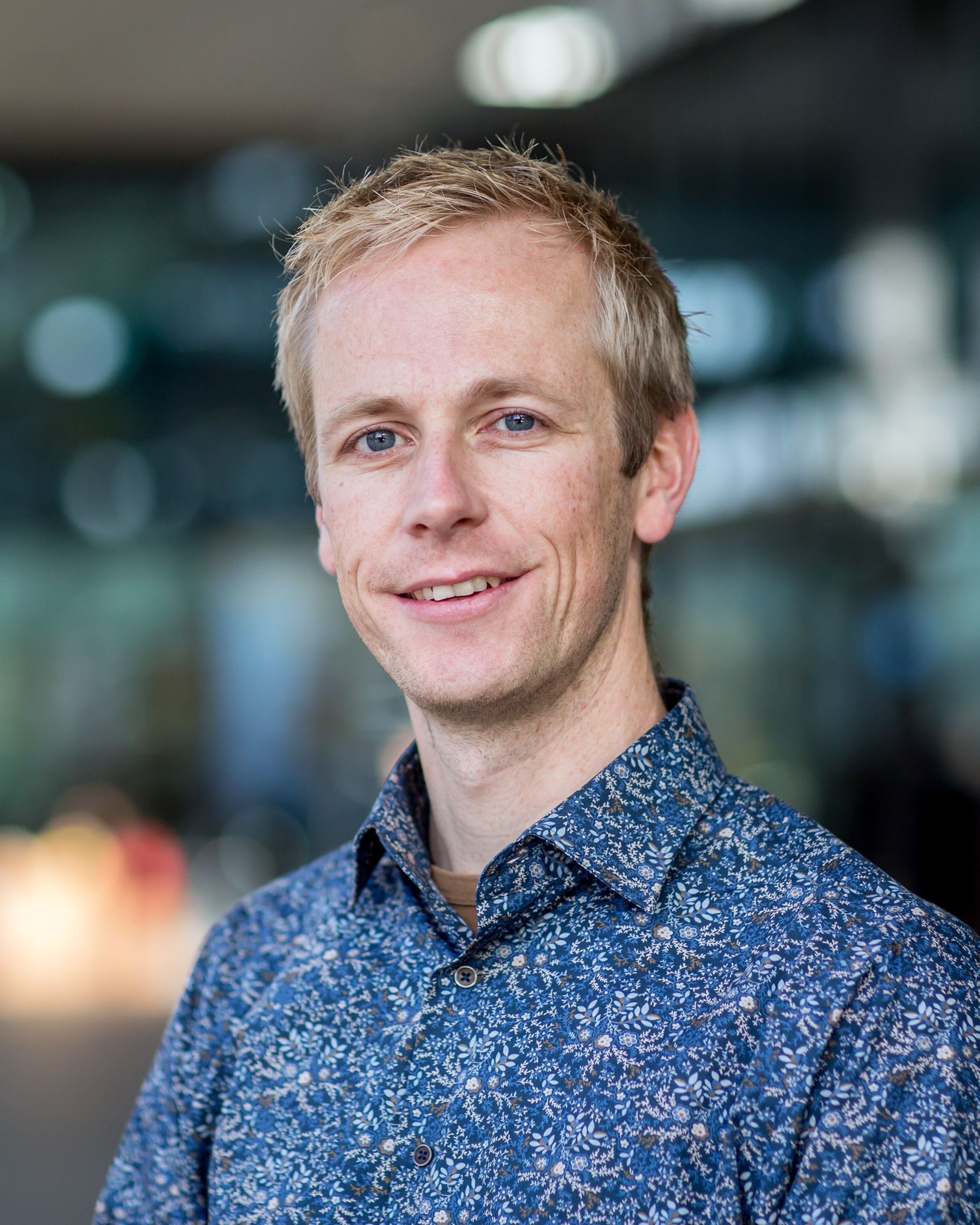

Motion Control Tuning features twenty trainers

The best way to teach the essence of the profession to people with insufficient theoretical backgrounds, according to TNO’s Gert Witvoet, is to drag them all the way through it once. Witvoet, who also serves as a part-time assistant professor at Eindhoven University of Technology, is one of the twenty trainers and supervisors involved in the MCT training. “They have to learn how to read such diagrams. They need to understand exactly what they mean. With this training you really learn how control engineers in the industry design controllers, and what the possibilities and limitations of feedback are,” says Witvoet.

The other target group consists of engineers who are theoretically prepared. They are trained in theoretical control technology and have a good background, including knowledge of the underlying mathematics. Most of them are international participants, who come to the Netherlands especially for the motion control training. “These people have moved from academia to industry but have often never designed a controller for an industrial system. They are unable to achieve good performance with modern tools, and the ability to tune classic PID controllers is often lacking,” says Oomen. Witvoet: “In our course they will learn the real industry practice: how to handle a motion system and come to a good design step by step.”

Tom Oomen says that he looks through ‘theoretical glasses’. Witvoet is more the applications guy. Both of them think it’s cool to teach engineers how to transfer the knowledge from state-of-the-art research into practice.

The academic world and industry work in very different ways, although their starting point is the same: a model. Researchers and engineers, however, each choose a different approach. Academics often use physical models including underlying mathematics, differential equations and the like. But in practice, engineers work with so-called non-parametric models such as frequency response functions. “This is very different from what we work with in the scientific world and we will work with it in the training”, says Oomen.

Tom Oomen.

MCT training part one is feedback design

Motion control tuning students get started with frequency response functions on the first day. They are quick and easy to obtain and are a means to reach the goal: to design a feedback controller. They measure the properties and characteristics of an existing mechatronic system. “A frequency response function follows from these measurements, which shows how the machine behaves,” says Oomen. “Then a model rolls out, which allows you to design a controller for that system.”

In contrast to these rapidly acquired and highly accurate frequency response models, many techniques from academia build a parametric model. For that they need detailed information on masses, springs, stiffness, dampers and so on. In practice, this is far too time-consuming. It is difficult to know all the parameters exactly.

But if you have an existing system, a frequency response is a good alternative. “You offer a suitable signal and simply measure how the system reacts,” says Witvoet. “This way you get a super good frequency response function of the input-output behaviour in just a few minutes, which allows you to design a good controller. If you then also know how to tune such a thing, you can make the best controller for your system, step by step, within a few minutes.”
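As a rough illustration of "offer a suitable signal and measure how the system reacts", the sketch below estimates one point of a frequency response function with a single sine. It is only a sketch under stated assumptions: the function `simulate_plant` is a hypothetical stand-in for the real machine (a first-order low-pass filter), and the single-sine method is one common way to measure a frequency response, not necessarily the one used in the course.

```python
import cmath
import math


def simulate_plant(u, a=0.9):
    """Hypothetical stand-in for the machine: y[k] = a*y[k-1] + (1-a)*u[k]."""
    y, state = [], 0.0
    for uk in u:
        state = a * state + (1.0 - a) * uk
        y.append(state)
    return y


def measure_frf_point(plant, cycles_per_window, n_window=1000, n_settle=1000):
    """Estimate the frequency response at one frequency from input/output data."""
    omega = 2.0 * math.pi * cycles_per_window / n_window  # rad per sample
    n_total = n_settle + n_window
    u = [math.sin(omega * k) for k in range(n_total)]
    y = plant(u)
    # Single-bin Fourier sums over a whole number of periods,
    # taken after the start-up transient has died out.
    window = range(n_settle, n_total)
    u_bin = sum(u[k] * cmath.exp(-1j * omega * k) for k in window)
    y_bin = sum(y[k] * cmath.exp(-1j * omega * k) for k in window)
    return omega, y_bin / u_bin


omega, h = measure_frf_point(simulate_plant, cycles_per_window=20)
print(f"gain {abs(h):.3f}, phase {math.degrees(cmath.phase(h)):.1f} deg")
```

Repeating the experiment over a range of frequencies gives the full frequency response function, without needing to know any masses or stiffnesses.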

Students in MCT training use a simple, practical system

Students get started with a very simple two-mass-spring-damper system. One mass is connected directly to the motor; the second mass (the load) is connected to the first mass. The system has position sensors at the motor as well as at the load. The challenge is to design a controller that controls the second mass accurately. Not easy, because the shaft between the two masses is torsionally flexible.
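For readers who want to see what such a system looks like in numbers, here is a minimal sketch of a textbook two-mass-spring-damper model. All parameter values are hypothetical and are not those of the course set-up; the model assumes the motor force acts on the first mass and that the shaft behaves as a linear spring and damper.

```python
import math

M1, M2 = 1.0, 1.0    # motor and load mass (hypothetical values)
K, D = 100.0, 0.2    # shaft stiffness and damping (hypothetical values)


def two_mass_frf(omega, m1=M1, m2=M2, k=K, d=D):
    """Return (motor, load) position response to motor force at omega rad/s."""
    s = 1j * omega
    shaft = d * s + k
    denom = s**2 * (m1 * m2 * s**2 + (m1 + m2) * shaft)
    return (m2 * s**2 + shaft) / denom, shaft / denom


for omega in (0.1, 10.0, 100.0):
    motor, load = two_mass_frf(omega)
    print(f"{omega:6.1f} rad/s  motor {abs(motor):.2e}  load {abs(load):.2e}")
```

At low frequencies both sensors see the same rigid body. Above the resonance the load response has the opposite sign to the motor response, which is the extra phase lag that makes control at the load so much harder.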

Oomen: “In practice, systems always measure the load. Just look at a printer. Somewhere there is a motor that moves the carriage via a drive belt. Because you want to know exactly where the ink is on the paper, you measure the position of the carriage. When you measure at the motor, you never know for sure, because the transmission between the motor and the print head is flexible.”

Gert Witvoet.

Even seasoned researchers in control technology sometimes have trouble understanding stubborn practice. In their experience, everything can be modelled in detail, including the transmission between motor and load. During visits to top international groups, Oomen regularly shows the experimental set-up from the motion control tuning training to theorists. “I then ask them if it makes any difference where I measure, at the motor or the load. Starting from theoretical concepts like controllability and observability, they usually answer that it doesn’t matter.”

In the MCT course, however, the trainers show that it is essential where you measure. “If you measure at the motor, then the sky is the limit in terms of performance. Everything is possible. Disturbances can be suppressed up to any frequency. But if you measure – as always in practice – at the load, then you are very limited, because you have to deal with unpredictable behavior due to flexible parts. Then there are significant limitations for control loops and the performance that you can actually achieve. If you want to make a stabilizing controller under these conditions, you have to be very careful. It’s easy to get unstable behavior. If you want to know exactly what that’s like, you have to come to the course,” laughs Oomen.
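The difference between the two sensor locations can be made concrete with a small calculation. The sketch below closes a simple PD loop around an idealised two-mass model and checks stability with the Routh-Hurwitz test for a fourth-order polynomial. Everything here is an assumption for illustration: the parameter values are hypothetical, the PD controller has no roll-off, and delays and sensor dynamics are ignored, so the motor-side result is more optimistic than a real machine would allow.

```python
def is_stable_quartic(a4, a3, a2, a1, a0):
    """Routh-Hurwitz test for a4*s^4 + a3*s^3 + a2*s^2 + a1*s + a0."""
    if min(a4, a3, a2, a1, a0) <= 0:
        return False
    first = a3 * a2 - a4 * a1
    return first > 0 and first * a1 - a3**2 * a0 > 0


def closed_loop_stable(kp, kd, sensor, m1=1.0, m2=1.0, k=100.0, d=0.2):
    """Stability of PD control (kp + kd*s) using the 'motor' or 'load' sensor."""
    # Characteristic polynomial with the load-side sensor.
    a4 = m1 * m2
    a3 = (m1 + m2) * d
    a2 = (m1 + m2) * k + kd * d
    a1 = kp * d + kd * k
    a0 = kp * k
    if sensor == "motor":
        # Extra terms that appear when the motor position is fed back.
        a3 += kd * m2
        a2 += kp * m2
    return is_stable_quartic(a4, a3, a2, a1, a0)


for kp, kd in ((10.0, 0.5), (1000.0, 50.0), (1e5, 5000.0)):
    print(kp, kd,
          "motor:", closed_loop_stable(kp, kd, "motor"),
          "load:", closed_loop_stable(kp, kd, "load"))
```

In this idealised model the motor-side loop stays stable as the gains are raised, while the load-side loop is only stable for the lowest gain setting.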

Harry Nyquist and Hendrik Bode

To give a motion controller stability, classic concepts are necessary. These were devised by Harry Nyquist and Hendrik Bode. Oomen: “In the first half of the last century, Nyquist already devised principles to guarantee the stability of such a control loop. I recently read a book from 1947 in which he described this. We still use this on a daily basis, in combination with those frequency response functions. Both are deeply interwoven. In this way we guarantee the stability of control loops.”

Mention the name Nyquist, and you’re also talking about Fourier and Laplace transformations. It might sound complicated but working with mathematics in practice doesn’t require a deep understanding. “We explain these concepts in a very intuitive way that is accessible to everyone,” says Oomen. “The role of these concepts in control design forms the basis and is encountered by control engineers in their work anyway. We think it’s important that people really know it, but it’s really not necessary to go deep into mathematics for that.”

After the basic concepts, the training makes the step to stability. Witvoet: “They learn to lay a good foundation with a picture, a Nyquist diagram. This allows students to test the stability of their system. All mysticism is then gone, because they know what’s underneath and how to use it. Students will then be able to turn the knobs and check whether the closed control loop is stable.”
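What "checking the Nyquist diagram" amounts to can be sketched in a few lines. The example below evaluates an open loop on a frequency grid and reads off two quantities that are visible in the Nyquist picture: the phase margin and the smallest distance to the point -1. The plant (a single mass), the lead controller and the target crossover of 10 rad/s are all assumptions chosen for illustration. The margins are a simplified check and do not replace the full Nyquist encirclement count.

```python
import cmath
import math


def open_loop(omega, crossover=10.0, mass=1.0):
    """Open loop of a lead controller with a single-mass plant (assumed example)."""
    s = 1j * omega
    plant = 1.0 / (mass * s**2)
    zero, pole = crossover / 3.0, crossover * 3.0
    gain = mass * crossover**2 / 3.0  # gives unit loop gain at the crossover
    controller = gain * (1 + s / zero) / (1 + s / pole)
    return controller * plant


def stability_margins(loop, omegas):
    """Return (phase margin in degrees, minimum distance to the point -1)."""
    values = [loop(w) for w in omegas]
    modulus_margin = min(abs(1 + v) for v in values)
    phase_margin = None
    for prev, cur in zip(values, values[1:]):
        if abs(prev) >= 1.0 > abs(cur):  # first crossing of the unit circle
            phase_margin = 180.0 + math.degrees(cmath.phase(cur))
            break
    return phase_margin, modulus_margin


omegas = [10 ** (-1 + 4 * i / 3999) for i in range(4000)]  # 0.1 to 1000 rad/s
pm, mm = stability_margins(open_loop, omegas)
print(f"phase margin {pm:.1f} deg, distance to -1: {mm:.2f}")
```

With measured data, `open_loop` would simply be the measured frequency response multiplied by the controller response at each frequency.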

This is followed by the step to an actual design. The first requirement of such a design may be stability, but in the end, it is all about performance. To achieve this, students are given a wide range of motion control tools such as notch, lead, lag filters and PID controllers. “It’s all in the engineer’s toolbox and it’s the prelude to one of the most appreciated afternoons of the course – the loop-shaping game. In this game, students will tune the controller as well as possible and squeeze out the performance. If they can do that, they’ll have mastered how a feedback controller works.”
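The tools in that toolbox are small transfer functions that can be multiplied together. The sketch below writes down common textbook forms of a notch, a lead or lag filter and a PID controller, evaluated at a complex frequency `s`. The parameterisations and the example values are assumptions; the course may use different forms.

```python
import cmath
import math


def notch(s, center, damp_num, damp_den):
    """Notch at 'center' rad/s; depth equals damp_num / damp_den."""
    num = s**2 + 2 * damp_num * center * s + center**2
    den = s**2 + 2 * damp_den * center * s + center**2
    return num / den


def lead_lag(s, zero, pole):
    """Lead filter when zero < pole, lag filter when zero > pole."""
    return (1 + s / zero) / (1 + s / pole)


def pid(s, kp, ki, kd):
    """Parallel-form PID: proportional, integral and derivative action."""
    return kp + ki / s + kd * s


s = 10j  # evaluate everything at 10 rad/s
controller = pid(s, 30.0, 20.0, 3.0) * lead_lag(s, 10 / 3, 30.0) * notch(s, 14.0, 0.01, 0.5)
print(f"gain {abs(controller):.1f}, phase {math.degrees(cmath.phase(controller)):.1f} deg")
```

Loop shaping then comes down to choosing these parameters so that the open loop has high gain where disturbances live and enough margin around the crossover.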

MCT training part two is feedforward controller design

In addition to the feedback controller for stability and disturbance suppression, each motion system also has a feedforward controller. This tells the system how to follow its path from A to B. This is also called reference tracking. “You control that with the feedforward controller,” says Oomen. “The most important part of the system’s performance comes from the feedforward control. Here, too, we briefly go into the theory and then immediately start experimenting. It is a very systematic and intuitive approach. Once you’ve done it, you can apply it immediately.”

By actually applying it, participants in the MCT training learn how things like mass feedforward and capture feedforward work. “It’s a very systematic approach that allows you to tune the parameters one by one in an optimal way,” says Oomen. “If you master that technique, you can tune the best feedforward controller for your system in just a few minutes, by doing iterative experiments.”
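The idea of tuning a feedforward parameter through iterative experiments can be sketched for mass feedforward alone. In the example below a simulated rigid mass follows a constant-acceleration reference under PD feedback. Whatever force the feedback controller still has to deliver during the acceleration is force the feedforward should have supplied, so it is used to correct the mass estimate. The simulated plant, the gains and the update rule are assumptions for illustration, not the course procedure.

```python
def run_experiment(mass_ff, mass_true=2.0, kp=400.0, kd=40.0,
                   accel=1.0, dt=1e-3, duration=2.0):
    """Simulate one constant-acceleration move; return the final feedback force."""
    x = v = t = 0.0
    u_fb = 0.0
    for _ in range(int(round(duration / dt))):
        r = 0.5 * accel * t * t          # reference position
        r_dot = accel * t                # reference velocity
        u_fb = kp * (r - x) + kd * (r_dot - v)
        force = u_fb + mass_ff * accel   # feedback plus mass feedforward
        v += force / mass_true * dt
        x += v * dt
        t += dt
    return u_fb


def tune_mass_feedforward(iterations=3, accel=1.0):
    """Update the feedforward mass after each experiment."""
    mass_ff = 0.0
    for _ in range(iterations):
        u_fb = run_experiment(mass_ff, accel=accel)
        mass_ff += u_fb / accel          # leftover feedback force per unit acceleration
    return mass_ff


print(f"tuned feedforward mass: {tune_mass_feedforward():.3f}")
```

Each further feedforward parameter would be tuned in the same spirit, one at a time, using the part of the reference profile where its effect dominates.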

Once you know how to measure a frequency response function and design a feedback and feedforward control, you can design controllers very quickly. Oomen: “Time is money, of course, and that’s why the entire Dutch high-tech industry does it this way. You can find it in Venlo at Canon Printing Systems and in Best at Philips Healthcare. The smaller mechatronic companies also use these techniques. At ASML in Veldhoven, almost all motion controllers in wafer scanners are tuned in this way. Once you are a little experienced, you can almost get the optimal performance out of the system. That’s within a few minutes and, of course, that’s cool.”

MCT training is 100 percent practice

When asked about the relationship between theory and practice, Oomen laughingly says that the MCT training is “100 percent practice”. “All the theory we do is essential to practice,” adds Witvoet. “We explain a number of theoretical concepts, but we do so by means of an application. It’s all about tuning. It’s really a design course and gradually one learns some theory. Every afternoon we work on that system, making frequency response functions and then fine tuning. Feedforward, feedback, it’s a daily job getting your hands dirty and your feet in the mud, because you apply the theory right away.”

After five days, participants will be able to develop a feedback and feedforward controller independently. On the final day, various trainers and experts discuss the developments within their field of expertise.

Oomen: “Within the five days, participants succeed in making controllers with one input and one output, but many industrial systems have multiple inputs and outputs. That seems to have consequences for tuning.” Witvoet: “We show where the dangers lie: when things can go wrong and, when they do, how to deal with them.”

To design control systems for multiple inputs and outputs, motion control engineers need a stronger theoretical basis. This knowledge of multivariable systems is discussed in the five-day Advanced Motion Control training course. “In this course, participants will learn in great detail how to make control systems with multiple inputs and outputs,” says Oomen. “We will follow the same philosophy and reasoning as in the Motion Control Tuning training.”

On the last day, learning from data is also discussed, a trend that is currently growing rapidly within the control area. “The latest generations of control systems can learn from past mistakes and at the same time correct them,” says Oomen. “In doing so, we use large amounts of data produced by sensors in machines. This enables us to correct machine faults within a few iterations. This paves the way for revolutionary new machine designs that are lightweight, more accurate, less expensive and more versatile, and it also allows existing machines to be upgraded in this way. On the last day of MCT, I’ll tell you about it for an hour, but in the Advanced Feedforward & Learning Control training course, we’ll take three days to do it.”
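One well-known way to let a machine learn from the errors of its previous run is iterative learning control. The article does not name a specific method, so the sketch below is only an assumed example of the general idea: a repeating task is executed, the tracking error is recorded, and the input for the next run is corrected with it. The plant model `run_trial` and the learning gain are hypothetical.

```python
def run_trial(u, a=0.3, b=0.7):
    """Hypothetical repeating machine: y[k+1] = a*y[k] + b*u[k], starting at rest."""
    y, state = [], 0.0
    for uk in u:
        state = a * state + b * uk
        y.append(state)  # y[k] is the first output that u[k] can influence
    return y


def learn(reference, trials=20, gain=1.0):
    """Repeat the task and correct the input with the previous tracking error."""
    u = [0.0] * len(reference)
    history = []
    for _ in range(trials):
        y = run_trial(u)
        error = [r - yk for r, yk in zip(reference, y)]
        history.append(max(abs(e) for e in error))
        u = [uk + gain * ek for uk, ek in zip(u, error)]
    return u, history


reference = [min(k / 10.0, 1.0) for k in range(50)]  # ramp to 1, then hold
_, history = learn(reference)
print("peak error per trial:", [f"{h:.1e}" for h in history[:6]])
```

For this particular plant and gain the peak error shrinks with every trial; with a real machine the learning gain has to be chosen with the measured frequency response in mind.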

This article is written by René Raaijmakers, tech editor of High-Tech Systems.

Recommendation by former participants

At the end of the training, participants are asked to fill out an evaluation form. To the question 'Would you recommend this training to others?' they responded with a 9 out of 10.

One of the many practice rounds during the training. Participants are practising to give and receive feedback.
